Messing around with AI generated concept art

It can be difficult to get these algorithms to make anything good

I’m aware of the controversy around AI generated art — neural networks trained on effectively stolen copyrighted imagery or replacing actual artists with computer programs. While the former is genuinely illegal (or at least should be) if companies are trying to sell services or AI generated imagery, the latter seems unlikely because AI generated stuff is, well, crap if it’s not curated or modified by someone with a genuine artistic talent.

I can see it making a graphic artist’s job easier by filling in backgrounds with clouds, trees, or crowds. I like to think of it as using collage to plan architecture or a painting; in fact, most of the images below are collages of different elements. Nearly all of them are significantly modified. They needed to be. I used getimg.ai with only a free account. It seems to be more directed at character generation, so I’m not sure it was suited to the tasks I gave it.

The first thing I wanted to do was generate a rolling green mountainous background for a memorizer complex as I just wrote the scene yesterday. The buildings are a reference to Borges’ The Library of Babel. This is part AI and part collage elements of a public domain diagram of columnar basalt:

I was entertained by the attempts to generate the entire image (these are unmodified):

I am about 20% of the way to the first draft and there’s a scene where the protagonist, Ian [1], goes to one of those complexes. I didn’t use the text from the book for the prompt, but here it is:

A high-pitched whirring started, and the ionopter accelerated. A vast complex of hexagonal buildings came into view. It was difficult for Ian to judge the scale, but it rivaled the mountains on the peninsula.

After quite a bit of prompt engineering, the AI generated a decent image — or at least one that could be straightforwardly modified into a decent image — for another scene I am in the process of writing where a couple of (air-breathing) characters are being detained on the Uutaruu homeworld. It’s not just empty space around them in the scene, but the task of generating complex imagery is beyond the scope of the various AI algorithms — it already struggles with pretty simple prompts. It kept putting extra people in it (this one originally had three people in it) or making them romantically involved (lol).

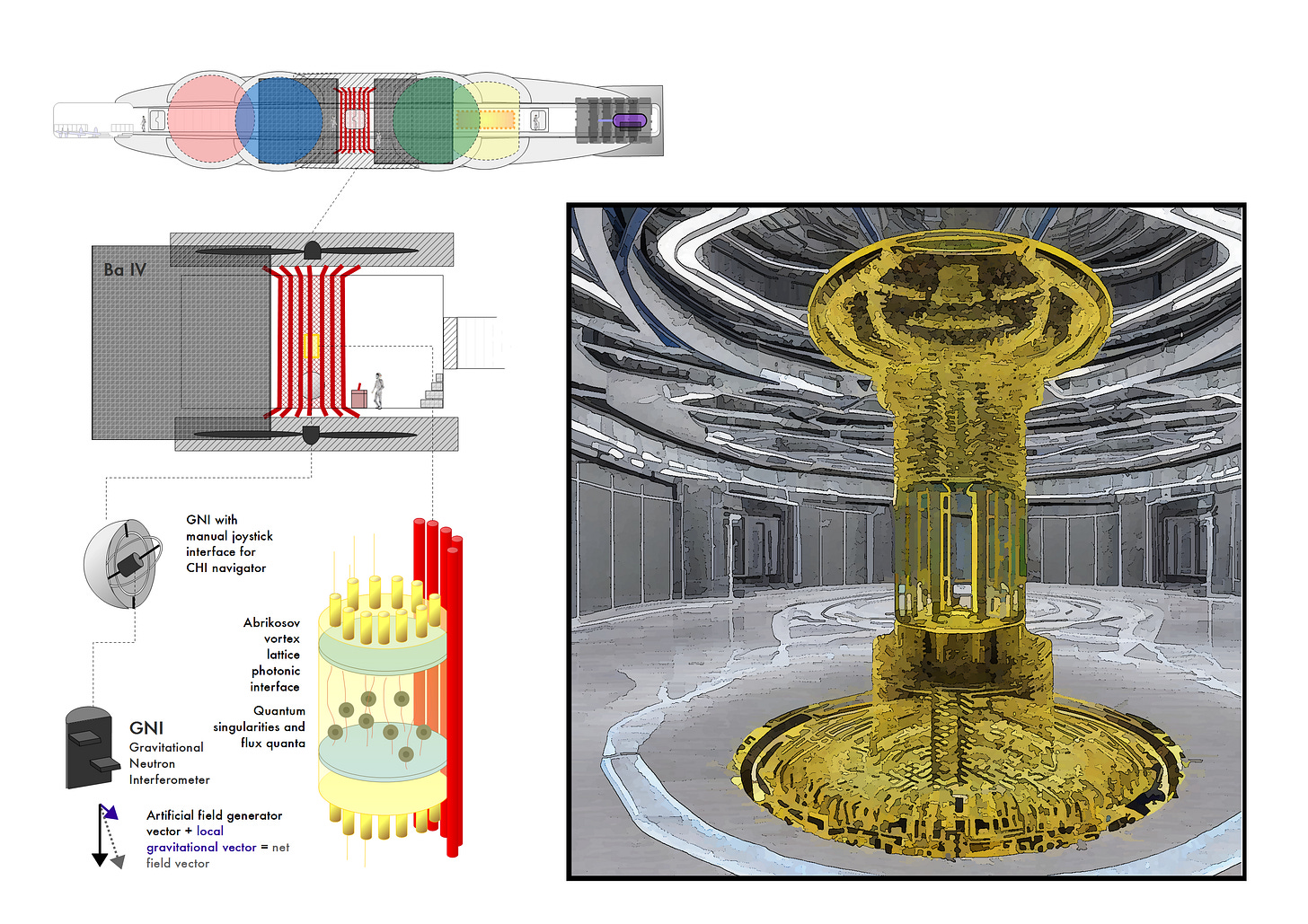

One of the things I did want to do was generate a non-IBM-owned image of a quantum computer for one of my cover art concepts. I’ve seen the things in labs enough over my career, but rarely can they be seen without a background of support equipment [2] except in IBM’s marketing materials. This proved to be quite a difficult task, using up nearly all of my free tokens for the month and requiring a paragraph-level prompt.

With some work, I was able to make the hyperquantum computers look a bit more like how I envisioned them for Inner Horizon, with an added deliberate visual reference to the David Lynch film Dune (1984) [3] that was always intended:

Here’s the description of the first hyperquantum singularity drive Ian sees:

The room was a large cylinder with an intricate organ of hundreds of gold-plated pipes and snaking bundles of wire at its center supported by organic formations of crystal. Booss chitin. Ian felt in his mind that it was an instrument playing complex electronica – ten different Bach inventions overtop ambient synth pitch-shifted into every octave. It was a marvelous noise.

Here’s the best AI-generated version of the engineering section of the Ulysses alongside the diagrams I made earlier:

I did find the image where the AI put a couple of benches in the room with the hyperquantum computer to be entertaining — like it’s in a museum.

I did try to generate an Uutaruu hyperquantum singularity drive (a la the kind that appears in a Voyager-class ship), but I guess there wasn’t much in the way of relevant training imagery. I mostly got things that look like pool equipment. These could be random Uutaruu technology:

Anyway, it was a fun challenge to try to make AI generated imagery match the vision in my head. I think artists’ jobs are safe unless someone wants to generate total trash.

Update

I had a few more credits (not sure getimg.ai keeps good track, lol) so I tried and failed to make the bridge of the Ulysses. A couple would be better suited to the flight deck of an ionopter.

This is the description of the protagonist walking on to the bridge of the Ulysses in the book draft:

The bridge was a palace of organic crystal melting smoothly into gleaming metal flight controls for every possible dimension. Threaded through various parts of the crystal skin, like arteries, were multi-colored bundles of wires and cables. And beyond that crystal, in every direction – above, below, and ahead – was space. The people walking and attending various consoles looked like they were floating in it. Ian felt like he might fall into it.

I had better luck with trying to generate memorizers — people in the 24th century who act as repositories of trusted data. Since almost any digital data can be hacked, all of it is memorized by memorizers. Ubiquitous gene editing using CRISPR by wealthy families in the early 21st century to have “optimal” children was successful at producing people with extraordinary memories. The cost was that while they are extremely intelligent, they are barely conscious (aka “philosophical zombies”) and prone to other genetic disorders. They are tall, practically models, but also generally lack hair and have extremely large eyes (none of the AI algorithms could generate that aspect with a human-looking face).

Memorizers are in the book as I wanted an example that separated the idea of intelligence from the idea of consciousness (also as a warning about genetic engineering and founder effects which plague various communities that separate themselves from the rest of humanity). The visual inspiration was both Mentats from Dune and the “humanoids” from The Black Hole (1979):

Along with a total failure with regard to the large eyes, it also had a problem with the lack of hair — “bald” as a prompt generated mostly old men and any other version (e.g. “no hair”) produced … people with hair. One of those was useful in that it produced a reasonable version of an Orion Union minister or member of parliament:

I imagine the better performance with regard to people is due to getimg.ai’s focus on character creation.

Update #2

They keep giving me more tokens, so I’ll keep messing around. This time, I tried to make some ships. This was almost uniformly garbage. (Note — I am applying my own homebrew version of the “Sable” filter on most of these images to create a uniform “look”.)

This is the best of several tries at making the Ulysses. I vastly prefer my own diagram version.

The best version of the Bellatrix wasn’t terrible, but the Uutaruu Voyager-class ships were all weird. A couple of the best examples are below.

The one bright spot was generating Kamiser landing pods (it couldn’t generate a ship, but a ship is basically a bunch of landing-pod-like components attached together). However, their simplicity and specificity given the prompt (“geodesic sphere”) likely made the job much easier. I’ll show these as getimg.ai produced them:

I mean my initial concept “collage” (and my scanned sketch in my notes) isn’t that hard to make:

AI generated art for science fiction might be useful for non-artists to generate ideas that they can pass to professional artists to say “something like this” — but on the whole it is not really good at making things for which analogs don’t already exist. Geodesic sphere? Yes! Giant hexagonal buildings in the mountains? No! People looking at touch screen displays? Sure! A toroidal quantum computer inside a ship filled with water? Nope!

Your mileage may vary, and sure, more complex neural nets will probably get somewhat better. However, the current instantiations seem best suited for character creation of human-looking characters.

Update #3

This one actually kind of works for me (although I did replace the sky with a NASA image) — a scene I just wrote that takes place in the Djorgovski 2 cluster. It’s an Intergalactic System conference center. These buildings on a Mars-like planet near the center of the galaxy (hence thousands of times more stars in the sky along with illuminated gasses) are 90 million years old:

The excerpt:

The sheer number, brightness, and density of stars in the sky lit the surface far more than even the brightest full moon. The conference building looked extremely minimal on the exterior – a reddish stone cylinder that largely matched the surrounding rock. It was just one of several cylinders of varying sizes around a dusty courtyard inlaid with multiple different modes of navigation: carved paths, green lights, wires, periodic stones that gave off a pleasant low frequency hum, among others.

Update #4

I’m on Bluesky now (@newqueuelure), and I mentioned something there about how AI generated stuff can create a lot of content. The big problem is that bringing any sort of human soul back to the material requires a human touch, with “curation” being the most viable option. Basically, a human sifting through hundreds of images to find the “right” ones. However, as I’ve discovered through my adventures in this post, this not only does not save a lot of time but requires at least some aesthetic talent.

This doesn’t mean content factories will not go through a phase of churning out trash (already underway, it seems) — but only that it may not turn out to be as economically viable a replacement for real artists as the tech bros seem to think. Large compute resources generating thousands of images that have to be sifted through by a person (or a team) charged with the aesthetic doesn’t necessarily sound more efficient than just hiring an artist. But maybe that’s me being optimistic.

Still, I had a pretty simple vision of a futuristic car, and it took 50 or so images to come up with six candidates, of which one (upper left) is decent:

In the book draft (I just focused on the car in the evening light):

There was a sleek road of solar panels catching the dying evening’s rays heading from the gate into the white light of a massive city in the distance. A car with a transparent body, reminiscent of a water drop rolling down a window, lit up as Tenne and Nico approached.

There was a pretty bad failure to generate anything like an ionopter. In the book draft:

In the middle of the pad was a ship. It was nowhere near as sleek as the car — a thick tube with thin wings. At the end of each wing it had a large nacelle with large propellers canted at an angle. Ian was surprised. “Thought things would be more futuristic in the outsider world.”

“It’s incredibly energy efficient,” says Tenne.

Here’s what getimg.ai came up with:

The lower left image is probably best for the body, but nothing could produce something with propellers without making it look like either a Gundam or something from the 1930s, at least when generating only 50 images or so. The lower right image prompted me to try to make the Àgbùfọn:

My guess was that this was somewhat more successful (somewhat, as I still had to cull a dozen or so images) because the Àgbùfọn is based on a drone taxi — something for which there are thousands of source images of concept art as well as some prototype vehicles. In the Inner Horizon universe, it’s a bit like Back to the Future making a time machine out of a DeLorean or Star Trek making the first warp drive out of an ICBM — a grad student in the 2100s makes a hyperquantum space ship out of an old high altitude drone taxi from the 2050s.

I continued to be entertained by making these, but I’m not sure I would ever use them for any real work except to say to a real artist (as I mention above): “something like this”. The strike rate for usable concept art for things that don’t yet exist is like 1% (or less if one’s standards are higher), so I’m not sure it is worth the time to do anything more than initial explorations.

Excerpts from Inner Horizon © Jason Smith

[1] This name could change. It was originally chosen as a placeholder based on a 90s musical reference (the singer of the Stone Roses), but alternately I’ve thought of Ian Macleod (aka brutalmoose) and Ian Goodfellow (co-inventor of Generative Adversarial Networks, GANs, a machine learning algorithm). ETA 8/3/2023: Now also thinking of JK of BTS.

[2] I mean you need something to interpret the signals from the qubits. And coolant.

[3] The throne room of the Emperor, to be specific:

[4] As a child, I was hit in quick succession by The Black Hole (1979), Star Trek: The Motion Picture (1979) (saw them on TV a bit later), The Empire Strikes Back (1980), Star Trek II: The Wrath of Khan (1982), Return of the Jedi (1983), and Dune (1984) with the last one making me realize that science fiction could be weird, prompting me to read much more of it (I had been mostly reading non-fiction or fantasy like Lord of the Rings).